Christina Shim, IBM’s Chief Sustainability Officer, discusses how to use AI effectively for sustainability and its potential impact on the ESG landscape.

AI is a top priority in the business plans of more than 80% of companies – but what could this mean for sustainability efforts?

Founded in 1911, IBM is a leader in AI and technology as a whole.

As IBM’s Chief Sustainability Officer, Christina Shim focuses on embedding sustainability into the company’s operations and everything it builds.

She works with colleagues across the world to understand how the company can drive efficient operations, navigate AI’s energy needs and make hybrid cloud options more affordable and sustainable for both IBM and its customers.

Her vision is to integrate sustainability so thoroughly into business practices that one day the CSO role is obsolete.

Christina shares her expertise with us.

What are IBM’s main sustainability goals?

Like most global businesses, IBM has a range of goals related to sustainability that involve energy efficiency, renewable electricity, recycling and more. It’s also exciting to me that we treat IBM as “client zero” for many of the sustainability solutions that we ultimately bring to customers.

For example, IBM offers software that helps organisations right-size their cloud computing or reduce manufacturing waste, which helps us drive impact beyond our own operations.

Similarly, we are doing a lot of great work on making AI more energy-efficient and cost-effective. In one case at the University of Alabama in Huntsville, it’s been estimated that using IBM Research’s Spyre AIU chip for a geospatial AI workload may save up to 23 kW of continuous power, roughly the electricity 20 US homes use in a year, and 85 tons of carbon emissions.

What do organisations need to consider when using AI?

Getting the most value out of AI requires using it in efficient, targeted and cost-effective ways.

That means using foundation models and tuning the smallest ones possible to meet your needs. Organisations should also be intentional about processing, using tools to minimise extra “headroom” and running workloads on renewable power whenever possible. And they should choose infrastructure specifically designed to run AI efficiently, which can dramatically improve performance.

Safety and governance are also enormously important.

This starts with rigorously vetting training data to eliminate harmful content and bias. At IBM, we’re so confident in how we do this that we disclose all datasets used to train our Granite models and indemnify our commercial users on watsonx. Businesses also need ways to manage, direct and monitor AI systems and models to ensure they stay within guardrails, comply with evolving regulations and continue to operate as intended. IBM supports this with its comprehensive watsonx.governance toolkit and Granite Guardian open-source safety LLMs, which help provide oversight and control of AI output.

How is IBM working to develop less resource-intensive AI?

IBM is a full-stack operator when it comes to AI. We train, tune and deploy models, but also design and operate hardware, which helps us uncover co-optimisations that can significantly reduce energy demands. We’ve made use of this expertise in a few ways.

IBM’s new Granite 3.0 family of models includes innovative techniques like speculative decoding that increase speed and efficiency. The models are available in various sizes, pre-trained with specific, relevant data, so organisations can use the smallest, most energy-efficient option that fits their needs.

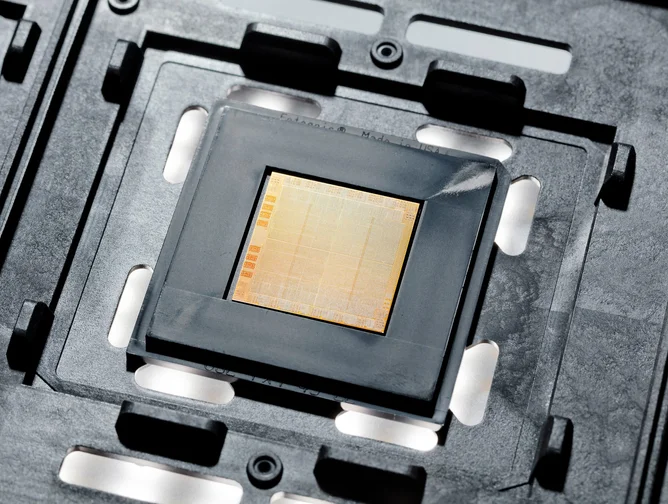

Our watsonx platform includes built-in observability, so users can measure their latency and throughput and optimise how they run their models. We have also launched the open-source InstructLab tuning method, which gives communities the tools to create and merge changes to LLMs without retraining the model from scratch. And IBM has released a number of impressive hardware innovations in this space, including our Telum II Processor and Spyre Accelerator, which are specifically designed to lower AI energy consumption.

How can ethics and governance support sustainable AI use?

Now is the moment for responsible AI, and that includes ensuring that we’re using it in a way that solves important problems sustainably over the long term. IBM is a proud advocate for AI transparency, explainability and openness, traits that are critical in letting the broader AI community understand AI’s impact and best innovate for long-term success.

I sit on IBM’s AI ethics board, a diverse and multidisciplinary team responsible for the governance and decision-making process for AI ethics policies and practices. These are based on three principles that guide how we think about AI at IBM: the purpose of AI is to augment human intelligence; data and insights belong to their creator; and new technologies like this must be transparent and explainable.

What advice would you give to organisations looking to use AI for sustainability?

There is no single solution.

Maximising AI’s value and sustainability comes from focusing on high-value use cases and making smart choices that reduce costs and impact at every step of the lifecycle: selecting small, efficient models tailored to the need; using hybrid cloud to have flexibility over where processing occurs and how that relates to energy costs; and adopting the most efficient AI-specific infrastructure.

If organisations can adopt some of these practical moves, AI can be a powerful tool for solving problems faster, helping us understand and cope with climate and business risks, and supporting our increasingly complex electric grids.