Nvidia’s new supercomputer offers enhanced Gen AI capabilities, including improved performance and lower cost, making advanced AI development more accessible.

As Gen AI continues to impact industries and create new possibilities, demand for accessible, powerful computing solutions is growing.

The evolution of AI hardware has traditionally followed a path where capabilities were initially available only to large corporations and research institutions with access to substantial computing resources.

As a result, significant barriers to entry have been created for smaller organisations, independent developers and educational institutions seeking to innovate in the AI space.

The cost of AI development infrastructure, particularly for Gen AI applications, has also been a major factor limiting broader adoption of, and experimentation with, these transformative technologies.

Against this backdrop, Nvidia has introduced a more affordable Gen AI supercomputer.

Nvidia has long led GPU technology and AI computing and has consistently pushed the boundaries of what’s possible in computational power.

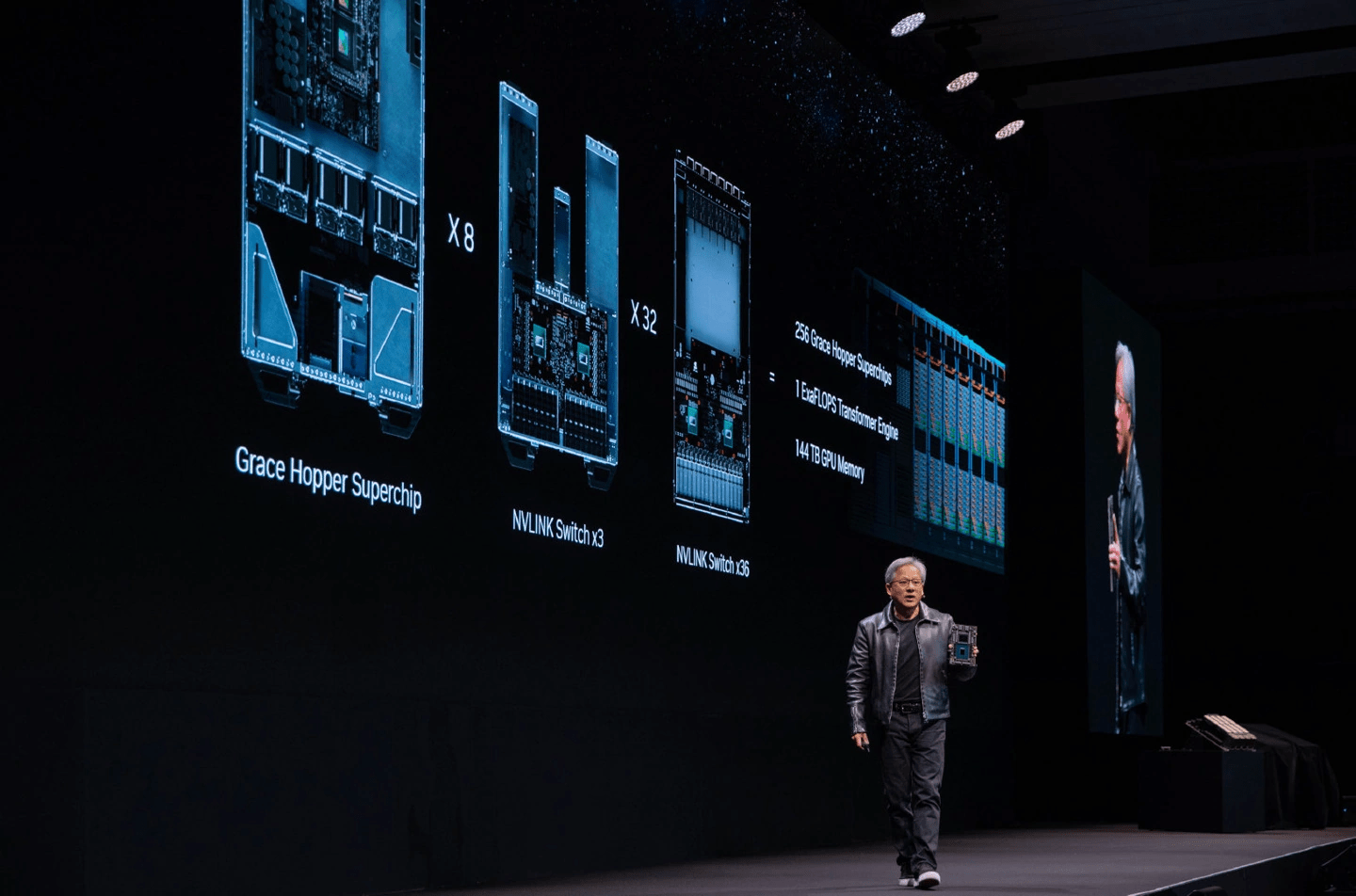

However, the company’s previous offerings in the AI hardware space – such as its HGX AI Supercomputing Platform – have typically been oriented towards enterprise-level customers with substantial budgets.

Therefore, a more affordable Gen AI supercomputer signals a potential shift in how developers and organisations can approach AI development and deployment.

Enhanced performance for Gen AI applications

The Jetson Orin Nano Super Developer Kit boasts significant improvements in Gen AI capabilities compared to its predecessor.

According to Nvidia, the device delivers up to a 1.7x increase in Gen AI inference performance, a 70% boost in overall performance to 67 INT8 TOPS and a 50% increase in memory bandwidth to 102 GB/s.
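To put those percentages in context, the short sketch below works backwards from the quoted figures to the predecessor values they imply, and combines the 67 INT8 TOPS figure with the 25 Watts and US$249 that Huang cites to give rough efficiency ratios. The function and variable names are illustrative, and the results are back-of-the-envelope arithmetic on the article's numbers rather than official Nvidia specifications.

```python
# Figures quoted in the article for the Jetson Orin Nano Super.
SUPER_INT8_TOPS = 67.0       # INT8 TOPS after the 70% boost
SUPER_BANDWIDTH_GBS = 102.0  # GB/s after the 50% increase
POWER_WATTS = 25.0           # from Huang's quote
PRICE_USD = 249.0            # from Huang's quote


def implied_baseline(new_value: float, percent_increase: float) -> float:
    """Return the earlier value implied by a percentage increase to new_value."""
    return new_value / (1.0 + percent_increase / 100.0)


# Work backwards from the quoted improvements (illustrative arithmetic only).
baseline_tops = implied_baseline(SUPER_INT8_TOPS, 70.0)
baseline_bandwidth = implied_baseline(SUPER_BANDWIDTH_GBS, 50.0)

# Rough efficiency ratios derived from the quoted headline numbers.
tops_per_watt = SUPER_INT8_TOPS / POWER_WATTS
tops_per_dollar = SUPER_INT8_TOPS / PRICE_USD

print(f"Implied predecessor compute:   {baseline_tops:.1f} INT8 TOPS")
print(f"Implied predecessor bandwidth: {baseline_bandwidth:.1f} GB/s")
print(f"Compute per watt:              {tops_per_watt:.2f} TOPS/W")
print(f"Compute per dollar:            {tops_per_dollar:.3f} TOPS/US$")
```

These ratios use peak INT8 throughput, so real workloads will likely see lower effective figures depending on the model and precision used.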

These enhancements make the device suitable for a range of applications, including:

- Creating LLM chatbots based on retrieval-augmented generation

- Building visual AI agents

- Deploying AI-based robots

The improved performance extends to popular Gen AI models and transformer-based computer vision applications.

Accessible platform for AI development

The Jetson Orin Nano Super Developer Kit consists of a Jetson Orin Nano 8GB system-on-module (SoM) and a reference carrier board.

This configuration provides an ideal platform for prototyping edge AI applications.

Key features of the SoM include:

- An Nvidia Ampere architecture GPU with tensor cores

- A 6-core Arm CPU

- Support for up to four cameras, offering higher resolution and frame rates than previous versions

These capabilities enable the device to support multiple concurrent AI application pipelines and high-performance inference.

Comprehensive software ecosystem and community support

Nvidia has developed an extensive software ecosystem to support developers working with the Jetson Orin Nano Super.

The Nvidia Jetson AI Lab offers immediate support for models from the open-source community and provides easy-to-use tutorials.

The device runs Nvidia AI software, including:

- Nvidia Isaac for robotics

- Nvidia Metropolis for vision AI

- Nvidia Holoscan for sensor processing

Developers can also leverage tools such as Nvidia Omniverse Replicator for synthetic data generation and Nvidia TAO Toolkit for fine-tuning pretrained AI models from the NGC catalog.

Additionally, Jetson ecosystem partners offer supplementary AI and system software, developer tools and custom software development services.

They can also assist with cameras, sensors and carrier boards for product solutions.

Jensen Huang, CEO of Nvidia, sums up his excitement about the new device: “This is a brand new Jetson Nano Super, offering almost 70 trillion operations per second, 25 Watts and US$249. It runs everything that the HGX does – it even runs LLMs and I can’t wait for all of you to try it. It’s available everywhere.”